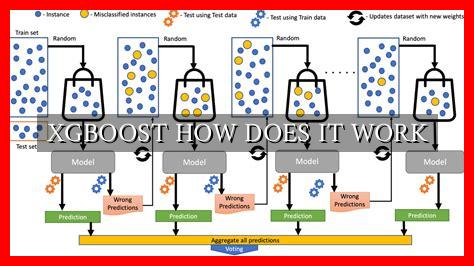

XGBoost: How Does It Work?

XGBoost, short for Extreme Gradient Boosting, is a gradient-boosted decision tree algorithm that has become popular for structured (tabular) data because it trains quickly and tends to predict accurately. This article covers how XGBoost works, its key components, and why it is so widely used for predictive modeling.

Boosting Algorithm Overview

Boosting is an ensemble learning technique that combines multiple weak learners, such as shallow decision trees, to create a strong learner. XGBoost is a specific implementation of the gradient boosting algorithm, which builds a series of decision trees sequentially, with each new tree fit to the errors left by the trees before it.

Key Components of XGBoost

1. Decision Trees

XGBoost uses decision trees as base learners. Each tree outputs a numeric score for every input, and the final prediction is the sum of the scores from all trees (passed through a link function such as the sigmoid for binary classification).

2. Gradient Boosting

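Gradient boosting optimizes a loss function by iteratively adding new models, each one fit to reduce the error of the current ensemble. This amounts to gradient descent in function space: every new tree approximates a step along the negative gradient of the loss. XGBoost goes one step further and uses both the first and second derivatives of the loss (a second-order approximation) when choosing splits and leaf values, which generally gives better-scaled updates than using the gradient alone.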
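To make the role of the derivatives concrete, here is a minimal sketch in plain Python. It computes the first and second derivatives for two common losses and the leaf value that minimizes the second-order approximation for the rows in one leaf. The function names are illustrative and are not part of the XGBoost API.

```python
import math

def squared_error_grad_hess(y_true, y_pred):
    """Derivatives of 0.5 * (y_pred - y_true)**2 with respect to y_pred."""
    return y_pred - y_true, 1.0

def logistic_grad_hess(y_true, raw_score):
    """Derivatives of binary log loss with respect to the raw (pre-sigmoid) score."""
    p = 1.0 / (1.0 + math.exp(-raw_score))
    return p - y_true, p * (1.0 - p)

def newton_leaf_weight(grads, hessians, reg_lambda=1.0):
    """Leaf value minimizing the second-order approximation: -G / (H + lambda)."""
    return -sum(grads) / (sum(hessians) + reg_lambda)

# Three rows landing in the same leaf, all starting from a raw score of 0.
labels = [1, 1, 0]
pairs = [logistic_grad_hess(y, 0.0) for y in labels]
grads = [g for g, _ in pairs]
hessians = [h for _, h in pairs]
print(newton_leaf_weight(grads, hessians))
```

Because two of the three labels are positive, the leaf value comes out positive, nudging the raw score for these rows upward. The `reg_lambda` term in the denominator shrinks that nudge.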

3. Regularization

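XGBoost incorporates regularization to prevent overfitting and improve generalization. Its objective penalizes model complexity directly: an L2 penalty (`reg_lambda`) and an optional L1 penalty (`reg_alpha`) shrink the leaf values, and `gamma` sets the minimum loss reduction a split must achieve to be kept. Parameters such as `max_depth`, `learning_rate`, and row or column subsampling limit variance further.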
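For reference, the regularized objective can be written as follows, using the notation of the original XGBoost paper. Here T is the number of leaves in a tree, w_j is the value of leaf j, and G and H are the sums of first and second derivatives of the loss over the rows in a leaf (or in the left and right child of a candidate split). The L1 term is omitted for brevity.

```latex
\mathcal{L} = \sum_{i} l(y_i, \hat{y}_i) + \sum_{k} \Omega(f_k),
\qquad
\Omega(f) = \gamma T + \tfrac{1}{2} \lambda \sum_{j=1}^{T} w_j^2

w_j^{*} = -\frac{G_j}{H_j + \lambda},
\qquad
\text{Gain} = \tfrac{1}{2} \left[
  \frac{G_L^2}{H_L + \lambda}
  + \frac{G_R^2}{H_R + \lambda}
  - \frac{(G_L + G_R)^2}{H_L + H_R + \lambda}
\right] - \gamma
```

A larger \lambda pulls every leaf value toward zero, and a larger \gamma makes the gain of marginal splits negative so that they are not kept.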

How XGBoost Works

When training an XGBoost model, the algorithm goes through the following steps:

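- Initialize the model with a constant prediction.

- At each round, compute the gradient (and second derivative) of the loss with respect to the current predictions.

- Build a new decision tree on those values to correct the errors of the previous trees, applying the regularization penalties while choosing splits and leaf values.

- Add the new tree to the ensemble, scaled by the learning rate, and repeat until the configured number of rounds is reached or a validation metric stops improving.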
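The steps above can be sketched in a few dozen lines of plain Python. This is a deliberately simplified illustration, not XGBoost's actual implementation: it assumes squared-error loss, a single numeric feature, and depth-1 trees (stumps), and it omits the `gamma` penalty. All function names are made up for the example.

```python
def fit_stump(xs, grads, hessians, reg_lambda):
    """Pick the single threshold with the highest regularized gain."""
    def score(g, h):
        return g * g / (h + reg_lambda)

    g_total, h_total = sum(grads), sum(hessians)
    order = sorted(range(len(xs)), key=lambda i: xs[i])
    best = None  # (gain, threshold, left_value, right_value)
    g_left = h_left = 0.0
    for pos in range(len(order) - 1):
        i, j = order[pos], order[pos + 1]
        g_left += grads[i]
        h_left += hessians[i]
        if xs[i] == xs[j]:
            continue  # no threshold can separate equal feature values
        g_right, h_right = g_total - g_left, h_total - h_left
        gain = 0.5 * (score(g_left, h_left) + score(g_right, h_right)
                      - score(g_total, h_total))
        if best is None or gain > best[0]:
            threshold = (xs[i] + xs[j]) / 2.0
            best = (gain, threshold,
                    -g_left / (h_left + reg_lambda),
                    -g_right / (h_right + reg_lambda))
    return best

def boost(xs, ys, n_rounds=20, learning_rate=0.3, reg_lambda=1.0):
    base = sum(ys) / len(ys)              # constant initial prediction
    preds = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        # Squared-error loss: gradient is (pred - y), second derivative is 1.
        grads = [p - y for p, y in zip(preds, ys)]
        hessians = [1.0] * len(xs)
        best = fit_stump(xs, grads, hessians, reg_lambda)
        if best is None:
            break                         # feature has no usable split
        _, threshold, left_value, right_value = best
        stumps.append((threshold, left_value, right_value))
        # Add the new stump to the ensemble, scaled by the learning rate.
        preds = [p + learning_rate * (left_value if x < threshold else right_value)
                 for p, x in zip(preds, xs)]
    return base, learning_rate, stumps

def predict(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(lv if x < t else rv for t, lv, rv in stumps)

def mse(model, xs, ys):
    return sum((predict(model, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Toy data (made up for illustration): a step from about 1 to about 3.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 1.1, 3.0, 3.2, 2.9, 3.1]
print(mse(boost(xs, ys, n_rounds=0), xs, ys), mse(boost(xs, ys, n_rounds=20), xs, ys))
```

The training error with 20 rounds is much lower than with the constant prediction alone. Note that regularization is not a separate final pass here: `reg_lambda` enters every split score and every leaf value as the trees are built.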

Benefits of XGBoost

XGBoost offers several advantages that make it a popular choice for machine learning tasks:

- Highly efficient and scalable for large datasets.

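- Handles missing values natively by learning, at each split, a default branch for rows where the feature is missing. Because tree splits depend only on the ordering of feature values, extreme feature values also tend to have limited influence.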

- Provides feature importance scores for interpretability.

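- Supports parallel processing for faster training. Trees are still added one after another, but the search for the best split within each tree is parallelized across CPU cores.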
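The missing-value handling mentioned above can be illustrated with a small sketch. For a candidate split, the rows with a missing feature value are tried on the left and on the right, and the direction with the higher gain becomes the default. This is a simplified picture of the idea in plain Python, assuming `None` marks a missing value; the function names are hypothetical and not part of the XGBoost API.

```python
def leaf_score(g, h, reg_lambda):
    return g * g / (h + reg_lambda)

def best_default_direction(xs, grads, hessians, threshold, reg_lambda=1.0):
    """Return (direction, gain) for rows whose feature value is None."""
    g_left = h_left = g_right = h_right = g_miss = h_miss = 0.0
    for x, g, h in zip(xs, grads, hessians):
        if x is None:
            g_miss += g
            h_miss += h
        elif x < threshold:
            g_left += g
            h_left += h
        else:
            g_right += g
            h_right += h

    parent = leaf_score(g_left + g_right + g_miss,
                        h_left + h_right + h_miss, reg_lambda)
    # Gain if missing rows follow the left branch.
    gain_left = 0.5 * (leaf_score(g_left + g_miss, h_left + h_miss, reg_lambda)
                       + leaf_score(g_right, h_right, reg_lambda) - parent)
    # Gain if missing rows follow the right branch.
    gain_right = 0.5 * (leaf_score(g_left, h_left, reg_lambda)
                        + leaf_score(g_right + g_miss, h_right + h_miss, reg_lambda)
                        - parent)
    if gain_left >= gain_right:
        return "left", gain_left
    return "right", gain_right

# Made-up gradients: the two missing rows behave like the low-valued rows.
xs = [1.0, 2.0, None, 8.0, 9.0, None]
grads = [-1.0, -1.0, -1.0, 1.0, 1.0, -1.0]
hessians = [1.0] * 6
direction, gain = best_default_direction(xs, grads, hessians, threshold=5.0)
print(direction, gain)
```

In this toy case the missing rows have gradients similar to the rows below the threshold, so sending them left gives the higher gain.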

Case Study: Predicting Customer Churn

Consider a typical application: using XGBoost to predict customer churn for a telecom company. Given customer data such as usage patterns, demographics, and customer service interactions, an XGBoost classifier can estimate the probability that each customer will churn.

By leveraging XGBoost’s ability to handle complex relationships in the data and its regularization techniques to prevent overfitting, the telecom company can proactively target at-risk customers with retention offers, ultimately reducing churn rates and improving customer satisfaction.

Conclusion

XGBoost is a powerful machine learning algorithm that excels in handling structured data and producing accurate predictions. By leveraging decision trees, gradient boosting, and regularization techniques, XGBoost can effectively model complex relationships in the data and prevent overfitting.

Whether you are working on a classification, regression, or ranking problem, XGBoost is a versatile algorithm that can deliver impressive results. Its efficiency, scalability, and interpretability make it a top choice for data scientists and machine learning practitioners.

For more information on XGBoost, you can visit the official XGBoost documentation.