
Table of Contents

- Learning to Optimize Tensor Programs

- Understanding Tensor Programs

- Challenges in Optimizing Tensor Programs

- Strategies for Optimizing Tensor Programs

- 1. Algorithmic Optimization

- 2. Data Layout Optimization

- 3. Parallelism and Concurrency

- Case Study: Optimizing Convolutional Neural Networks

- Conclusion

Learning to Optimize Tensor Programs

Tensor programs are increasingly common in machine learning, scientific computing, and data analysis because they handle multi-dimensional data efficiently. However, optimizing them is challenging and requires a solid understanding of both the underlying algorithms and the hardware architecture. In this article, we will explore some strategies for learning to optimize tensor programs effectively.

Understanding Tensor Programs

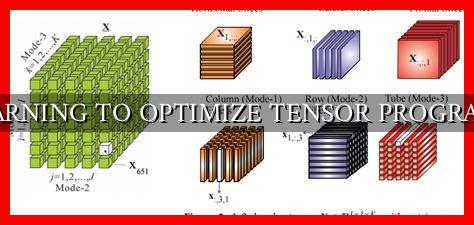

Before diving into optimization techniques, it is essential to have a clear understanding of what tensor programs are and how they work. A tensor is a multi-dimensional array that can represent complex data structures such as images, videos, and neural network weights. Tensor programs involve performing operations on these tensors, such as matrix multiplication, convolution, and element-wise operations.
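To make these terms concrete, here is a minimal sketch in NumPy (chosen purely for illustration; the article does not prescribe a library). The shapes and values are arbitrary assumptions, and the convolution shown is the simple one-dimensional case.

```python
import numpy as np

# A rank-3 tensor: a tiny 2x3 "image" with 4 channels (illustrative shape).
image = np.arange(24, dtype=np.float32).reshape(2, 3, 4)

# Element-wise operation: every entry is scaled independently.
scaled = image * 0.5

# Matrix multiplication of two rank-2 tensors: (2, 3) @ (3, 4) -> (2, 4).
a = np.ones((2, 3), dtype=np.float32)
b = np.ones((3, 4), dtype=np.float32)
product = a @ b

# 1-D convolution of a short signal with a 3-tap kernel.
signal = np.array([1.0, 2.0, 3.0, 4.0])
kernel = np.array([1.0, 0.0, -1.0])
convolved = np.convolve(signal, kernel, mode="valid")

print(image.shape, product.shape, convolved)
```

Each of the three operations reads and writes memory in a different pattern, which is why they tend to respond to different optimizations.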

Challenges in Optimizing Tensor Programs

Optimizing tensor programs can be challenging due to the high dimensionality of tensors and the complexity of the operations involved. Some common challenges include:

- Memory access patterns

- Parallelism and concurrency

- Optimizing for specific hardware architectures

Strategies for Optimizing Tensor Programs

There are several strategies that can be employed to optimize tensor programs effectively:

1. Algorithmic Optimization

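One of the first steps in optimizing tensor programs is to choose the right algorithms and data structures. For example, Strassen’s algorithm multiplies two n × n matrices in roughly O(n^2.81) arithmetic operations instead of the O(n^3) of the schoolbook method, which can pay off for large matrices. For small matrices, its extra additions and memory traffic often outweigh the savings.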
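As a rough sketch of the idea, the function below implements Strassen's recursion on top of NumPy. The name `strassen` and the `cutoff` parameter are illustrative choices, not part of any library, and the code assumes square matrices whose size stays even at every recursion level above the cutoff (for example, a power of two).

```python
import numpy as np

def strassen(a, b, cutoff=64):
    """Multiply two square matrices with Strassen's seven-product recursion.

    Assumption: the matrix size is even at every level above `cutoff`.
    """
    n = a.shape[0]
    if n <= cutoff:
        return a @ b  # small blocks: fall back to the ordinary product
    if n % 2:
        raise ValueError("size must be even at every level above the cutoff")
    h = n // 2
    a11, a12, a21, a22 = a[:h, :h], a[:h, h:], a[h:, :h], a[h:, h:]
    b11, b12, b21, b22 = b[:h, :h], b[:h, h:], b[h:, :h], b[h:, h:]

    # Seven recursive products instead of the usual eight.
    m1 = strassen(a11 + a22, b11 + b22, cutoff)
    m2 = strassen(a21 + a22, b11, cutoff)
    m3 = strassen(a11, b12 - b22, cutoff)
    m4 = strassen(a22, b21 - b11, cutoff)
    m5 = strassen(a11 + a12, b22, cutoff)
    m6 = strassen(a21 - a11, b11 + b12, cutoff)
    m7 = strassen(a12 - a22, b21 + b22, cutoff)

    # Recombine the products into the four quadrants of the result.
    c = np.empty((n, n), dtype=np.result_type(a, b))
    c[:h, :h] = m1 + m4 - m5 + m7
    c[:h, h:] = m3 + m5
    c[h:, :h] = m2 + m4
    c[h:, h:] = m1 - m2 + m3 + m6
    return c

rng = np.random.default_rng(0)
x = rng.standard_normal((128, 128))
y = rng.standard_normal((128, 128))
print(np.allclose(strassen(x, y, cutoff=16), x @ y))
```

Whether this beats a tuned library routine depends on matrix size and hardware, so treat the cutoff as something to measure rather than a fixed constant.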

2. Data Layout Optimization

Optimizing the data layout of tensors can have a significant impact on performance. For example, choosing between row-major and column-major layout so that the innermost loop walks through contiguous memory improves memory access patterns and cache efficiency.
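The difference between the two layouts can be inspected directly. The sketch below uses NumPy as an assumed example library and prints the strides, that is, the number of bytes skipped when stepping along each axis.

```python
import numpy as np

rows, cols = 4, 3

# Row-major (C order): elements of one row sit next to each other in memory.
row_major = np.arange(rows * cols, dtype=np.float64).reshape(rows, cols)

# Column-major (Fortran order): same values, but columns are contiguous.
col_major = np.asfortranarray(row_major)

# Strides are (bytes per step along axis 0, bytes per step along axis 1).
print(row_major.strides)  # (24, 8): moving along a row touches adjacent floats
print(col_major.strides)  # (8, 32): moving down a column touches adjacent floats

# The logical contents are identical; only the memory order differs.
print(np.array_equal(row_major, col_major))  # True
```

A loop that iterates over rows in its inner dimension will generally favor the first layout, and one that iterates over columns the second.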

3. Parallelism and Concurrency

Utilizing parallelism and concurrency techniques such as multi-threading, SIMD instructions, and GPU acceleration can help speed up tensor operations. Tools like TensorFlow and PyTorch provide built-in support for parallel execution.
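As one illustration of multi-threading, the sketch below splits a matrix product into row blocks and computes them in a thread pool. `parallel_matmul` and its `workers` parameter are hypothetical names introduced here. Note that NumPy's own matrix product is often backed by a BLAS library that may already use several threads, so this is a demonstration of the decomposition rather than a guaranteed speedup.

```python
import numpy as np
from concurrent.futures import ThreadPoolExecutor

def parallel_matmul(a, b, workers=4):
    """Compute a @ b by giving each worker thread a block of rows of `a`."""
    # Each row block of the result depends only on the matching rows of `a`,
    # so the blocks can be computed independently.
    chunks = np.array_split(a, workers, axis=0)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        parts = list(pool.map(lambda block: block @ b, chunks))
    # pool.map preserves input order, so stacking restores the full result.
    return np.vstack(parts)

rng = np.random.default_rng(0)
left = rng.standard_normal((200, 50))
right = rng.standard_normal((50, 30))
print(np.allclose(parallel_matmul(left, right), left @ right))
```

The same row-block decomposition is the basis for distributing work across GPU thread blocks or multiple devices, although the mechanics differ.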

Case Study: Optimizing Convolutional Neural Networks

Convolutional neural networks (CNNs) are widely used in image recognition tasks and involve complex tensor operations. By optimizing the data layout, using parallel processing, and leveraging hardware accelerators such as GPUs, researchers have been able to achieve significant speedups in CNN training and inference.
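One commonly used data-layout technique for convolutions is im2col, which copies each input patch into a column so that the whole convolution becomes a single matrix multiplication. The article does not name a specific method, so the sketch below is only one possible example. The function names and the (channels, height, width) layout are assumptions, and it covers only stride 1 without padding.

```python
import numpy as np

def conv2d_naive(x, w):
    """Direct convolution. x: (C, H, W), w: (K, C, R, S), stride 1, no padding."""
    c, h, wd = x.shape
    k, _, r, s = w.shape
    oh, ow = h - r + 1, wd - s + 1
    out = np.zeros((k, oh, ow), dtype=x.dtype)
    for f in range(k):
        for i in range(oh):
            for j in range(ow):
                out[f, i, j] = np.sum(x[:, i:i + r, j:j + s] * w[f])
    return out

def conv2d_im2col(x, w):
    """Same result, computed as one matrix product over unrolled patches."""
    c, h, wd = x.shape
    k, _, r, s = w.shape
    oh, ow = h - r + 1, wd - s + 1
    # One column per output position, holding the flattened input patch.
    cols = np.empty((c * r * s, oh * ow), dtype=x.dtype)
    col = 0
    for i in range(oh):
        for j in range(ow):
            cols[:, col] = x[:, i:i + r, j:j + s].ravel()
            col += 1
    # Each filter becomes one row; the product yields every output at once.
    return (w.reshape(k, -1) @ cols).reshape(k, oh, ow)

rng = np.random.default_rng(0)
x = rng.standard_normal((3, 8, 8))      # 3 channels, 8x8 image
w = rng.standard_normal((4, 3, 3, 3))   # 4 filters of size 3x3
print(np.allclose(conv2d_naive(x, w), conv2d_im2col(x, w)))
```

The trade-off is extra memory for the unrolled patches in exchange for handing the arithmetic to a highly tuned matrix-multiplication routine, which in turn can run on parallel hardware.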

Conclusion

Optimizing tensor programs is a complex but rewarding task that can lead to significant performance improvements in various applications. By understanding the underlying algorithms, data structures, and hardware architecture, developers can effectively optimize tensor programs for maximum efficiency.

For further reading on this topic, see the TensorFlow documentation on XLA, its optimizing compiler for tensor programs.